Chicago Crime Analytics Using Mage, dbt, PostgreSQL, and Power BI: An End to End Data Pipeline Project

Part 1: Analytics Infrastructure Setup:

Projects are a great way to help improve your technical skills for data engineering, analytics engineering, or data analytics. They also bring value to your job search process when you can showcase them in your GitHub or portfolio webpage. And when it comes to interviews, a lot of people say they can extract data, move it from its source location, transform it, and load it into another location. In your interviews, they are going to say, "prove it." My goal here is to help you prove it.

Medallion Architecture

Medallion architecture is a data management framework used in modern data warehousing, which organizes data processing into three distinct layers: bronze (or raw), silver (or refined), and gold (or aggregated). In this architecture, data moves through these layers, becoming progressively more refined, usable, and valuable. Starting with the raw, unprocessed data in the bronze layer, it is cleansed and transformed in the silver layer, then finally aggregated or summarized into business-level insights in the gold layer. This structured approach not only enhances the clarity and usability of data but also optimizes the ETL process by segregating different types of data transformations. It's a fantastic setup for ensuring that data is systematically processed, making it especially suitable for analytics and business intelligence tasks.

Data Pipeline Architecture

As you can see, based on the description of the Medallion Architecture, we are doing something similar with our technical stack. We will extract data from the Chicago Data Portal into the Mage data pipeline. From there, we will perform transformations on the data and push those transformation layers to our PostgreSQL database for data storage. Finally, we will connect our PostgreSQL database to Power BI where we can visualize our data for reporting.

Tech Stack Setup

This week we are going to handle some project pre-requisites. We will set up everything we need for our project’s data stack, obtain API keys, and create a new pipeline in Mage.

Get API Access

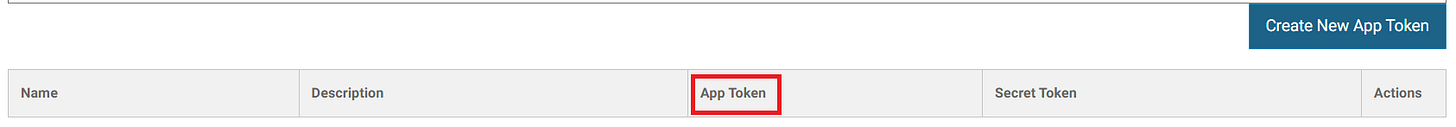

Accessing the Chicago Data Portal API requires you sign up in order to get an API App Token. Sign up using the link above and then follow the rest of the instructions for an API key:

Login or create a new username and password

Select the Create New App Token button at the bottom right of the page

Give your App Token a name and description and hit save

Your New App Token will appear under the App Token column

Docker / Docker Desktop

Next we are going to get access to Docker or Docker Desktop. We will use this to install Mage into a Docker Container. Docker is available for Mac, Linux, and Windows (with WSL-2 or Hyper-V). Although not a requirement, to follow along in this tutorial, you will need Docker to download Mage.

Before downloading Docker on a Windows Machine make sure to download WSL 2. Check out the docs and download site for download instructions

Due to docker having multiple different download instructions, it would be best to follow the Docker documentation on installing Docker.

https://docs.docker.com/get-docker/

PostgreSQL Database

PostgreSQL is an industry standard database and its Graphical User Interface (GUI) tool, PGAdmin4 is very user friendly. Take the following steps to download PostgreSQL and PGAdmin4:

Begin to download PostgreSQL here

Select the download option for your operating system

Select the download the installer link

Select the download link under the Windows x86-64.

When the install wizard starts click next

The setup wizard will appear. You can change the installation directory or use the default directory and click next.

Select the components you need. The default will be all 4 components and then select next.

A second data directory will appear click next if you want to use the default directory

Choose a password in the setup wizard for the root user then click next.

Choose the default port number and click next.

Select next for the default locale

Select next for the setup pre-installation summary.

Select next when PostgreSQL is ready to install and the install will begin.

Select finish in the Setup pop-up wizard to complete the install.

Power BI

Power BI Desktop is a free Data Analytics and Visualization tool offered by Microsoft. Downloading Microsoft Power BI requires the following:

Go to the Power BI Desktop download site

Scroll down to the “Create a data-driven culture with BI for all” section and click the free account button

Select the Power BI Desktop drop down and click the “get the app” button. This will bring you to the Microsoft Store

Click the Open Microsoft Store button from the popup

Once you are in the Microsoft Store click “Get” under the Power BI logo to begin the download

When the installation is complete click the “Launch” button to start the Power BI application

You will need to have a Microsoft account or create one to log into Power BI

Follow any additional account creation and login instructions from the application

If you are working on a Mac you may want to work in Tableau or any other Business Intelligence Tool of your choice.

Mage

Mage is an open source data orchestration tool that helps you transform and integrate data. Their blocks (notebook-style code editors) allow you to write and execute Python, SQL, and R code for data processing tasks. Mage also has an integration with dbt Core which we will rely on for this project.

Once you install docker you can then install Mage into the Docker container.

Docker Run in Windows Command Line

docker run -it -p 6789:6789 -v "%cd%:/home/src" mageai/mageai /app/run_app.sh mage start chicago_crimesIf you are installing from a Mac or Linux Machine, need additional help, or want to install Mage a different way, check out their documentation.

After running the command above from PowerShell or any Microsoft CLI you can open Mage from the Docker Desktop GUI by clicking the link under the Ports section. It should look like this 6789:6789. If you are not using Docker Desktop open the Mage overview page at http://localhost:6789.

Create a New Pipeline in Mage

Click the new pipeline button and then click Standard (Batch) option located at the top left of the GUI to begin the pipeline.

Either use the name provided by Mage or delete it and customize a name for your pipeline in the popup screen provided. You can give the pipeline a description if you want.

Hit create and you will be taken to the Pipeline editor GUI in Mage.

Closing Mage

If you are using Docker Desktop under the actions column click the square stop button to stop the Docker container. You can close your Mage GUI from localhost://6789 either before or after stopping the docker container and your work will be saved.

If you are running Docker from the terminal or a CLI you can close the container by entering Ctrl + C and then typing exit and hitting enter. This will exit you from your Mage instance and the Docker Container.

Conclusion

This week you successfully created a local data and analytics engineering infrastructure that will allow you to move data from an API source to your Business Intelligence tool. Now that you have downloaded and installed Docker, PostgreSQL, Mage and Power BI you have a local, full stack data analytics and engineering infrastructure. Next week you will create a new dbt project in Mage, extract crime data from the Chicago Data Portal, and build the first transformations in your data pipeline.

Amazing post Cole! What if I install Mage using pip and the command line? I haven't used docker and know nothing about it.

Will I be able to follow along this way?